What configuration should a data scientist go for?

A KDnuggets poll indicates a 3-4 core 5-16GB Windows machine.

A StackExchange thread recommends a 16GB RAM, 1TB SSD Linux system with a GPU.

A Quora thread converges around 16GB RAM.

RAM matters. Our experience is that RAM is the biggest bottleneck with large datasets. Things speed up by an order of magnitude when all your processing is in-memory. 16GB of RAM is an ideal configuration. Do not go below 8GB.
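To get a feel for whether a dataset will stay in memory, a back-of-the-envelope estimate helps. The sketch below is only a rough guide: the function name `fits_in_memory`, the 8 bytes per value (a 64-bit number), and the 2x headroom factor for working copies are all our assumptions, not measured figures.

```python
def fits_in_memory(n_rows, n_cols, ram_gb, bytes_per_value=8, overhead=2.0):
    """Rough check: does a numeric table fit in RAM with working headroom?

    bytes_per_value=8 assumes 64-bit numbers; overhead=2.0 is an assumed
    allowance for temporary copies made during processing.
    """
    data_bytes = n_rows * n_cols * bytes_per_value
    needed_bytes = data_bytes * overhead
    return needed_bytes <= ram_gb * 1024**3


# 100 million rows x 10 numeric columns is about 8 GB of raw data
print(fits_in_memory(100_000_000, 10, ram_gb=16))
print(fits_in_memory(100_000_000, 10, ram_gb=8))
```

Text-heavy data usually takes far more than 8 bytes per value, so treat the result as optimistic.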

Big drives. The next biggest driver is the hard disk speed. But you don’t necessarily need an SSD. If your data fits in memory, then most data access is sequential. For that kind of access, an SSD is only ~2X faster than a regular hard disk, but much more expensive. (If you’re running a database, then an SSD makes more sense.) Among hard disks, larger ones are also faster due to higher storage density. So prefer the 1 TB disks.

The CPU doesn’t matter. Make sure you have more cores than data-intensive processes, but other than that, it’s not an issue.
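If you want to check the cores rule of thumb on a given machine, a minimal sketch could look like this. The helper name `enough_cores` is ours, and note that `os.cpu_count()` reports logical CPUs, which may be more than the number of physical cores.

```python
import os


def enough_cores(n_processes, available=None):
    """True if there are strictly more cores than data-intensive processes."""
    if available is None:
        # os.cpu_count() returns logical CPUs, or None if it cannot tell
        available = os.cpu_count() or 1
    return available > n_processes


# Example: planning to run 3 heavy processes on this machine
print(enough_cores(3))
```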

However, one common theme we find is that heavy data science work happens on the cloud, not on the laptop. That’s what you need to be looking for — a good cloud environment that you can connect to.

For example, this Frontanalytics report recommends a basic laptop with long battery life, the ability to multi-task (i.e. multiple cores), and a backlit keyboard for the night.

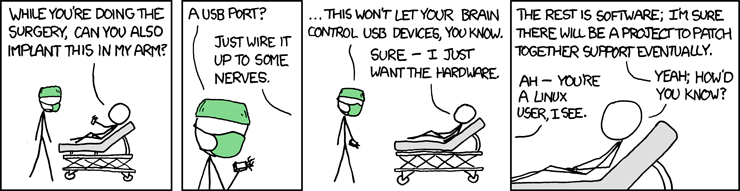

Maybe you just need a USB port in your arm.